The world of broadcast and production can be a minefield of contradictory challenges. Reduce bit rates but maintain visual quality; apply precise subtitles but broadcast in real time; create highlight content but transmit it immediately after the main event. According to Simon Forrest, principal technology analyst at Futuresource Consulting, these and many more headaches can be alleviated by the rise of artificial intelligence (AI) and machine learning technologies.

AI broadcast solutions

“Machine learning is beginning to impact on the broadcast solutions market, unlocking a range of opportunities for the industry,” says Forrest. “One of the most notable, but perhaps lesser-known, areas is the application of AI to video encoding technology. Machine learning techniques are employed during video encode to reduce file sizes and bit rates whilst maintaining visual quality.”

A reduction in bit rates leads to significant cost savings in network bandwidth and delivery; indeed, the more efficiently a broadcaster or OTT service provider uses bandwidth, the more profitable it can be. Forrest explains further: “Machine learning allows encoders to optimise video encode parameters on a scene-by-scene basis, whilst the learning itself is fed back into the system to enhance future encoding sessions. This can also speed up encoding times. The feedback loop ensures that the AI applies better encode parameters in subsequent sessions, which over time approach the optimum compression for a given scene.”
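The feedback loop Forrest describes can be illustrated with a minimal sketch. Everything here is an assumption made for illustration: the `EncodeTuner` class, the scene-type labels, the 0-100 quality score, and the use of a CRF-style setting (where a higher value means more compression) are hypothetical and do not come from any particular encoder or vendor. The default CRF is also assumed to meet the quality target.

```python
from dataclasses import dataclass, field
from typing import Dict


@dataclass
class EncodeTuner:
    """Hypothetical per-scene tuner: learns the highest CRF that keeps quality on target."""

    quality_target: float = 90.0   # assumed quality score on a 0-100 scale
    default_crf: int = 23          # assumed starting point for unseen scene types
    max_crf: int = 40              # never suggest more compression than this
    best_crf: Dict[str, int] = field(default_factory=dict)    # highest CRF that passed
    failed_crf: Dict[str, int] = field(default_factory=dict)  # lowest CRF that failed

    def suggest(self, scene_type: str) -> int:
        """Propose a CRF for the next encode of this scene type."""
        learned = self.best_crf.get(scene_type, self.default_crf)
        ceiling = self.failed_crf.get(scene_type, self.max_crf + 1)
        candidate = min(learned + 1, self.max_crf)
        # Probe one step further only if that step has not already failed.
        return candidate if candidate < ceiling else learned

    def feedback(self, scene_type: str, crf: int, measured_quality: float) -> None:
        """Feed the measured result of an encode back into the tuner."""
        if measured_quality >= self.quality_target:
            previous = self.best_crf.get(scene_type, self.default_crf)
            self.best_crf[scene_type] = max(previous, crf)
        else:
            previous = self.failed_crf.get(scene_type, self.max_crf + 1)
            self.failed_crf[scene_type] = min(previous, crf)
```

Each session calls `suggest`, encodes, measures quality, and calls `feedback`. Over repeated sessions the suggestion settles on the most aggressive setting that still meets the quality target for that kind of scene. A production system would learn from far richer scene features than a single label.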

Closed captioning and subtitling is another area where machine learning techniques can be applied, according to Forrest. Algorithms trained on massive language datasets can transcribe speech into text in real time and apply it automatically to broadcast assets. AI can also be engineered to loosely identify the context of the speech, and future iterations may use intonation and inflexion to further improve accuracy rates.

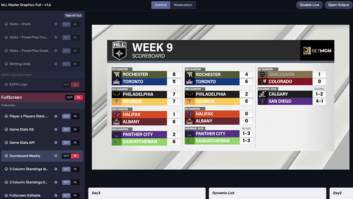

When it comes to highlight content or showreels, companies like Aspera are already employing machine learning techniques to automatically search video for specific content, both audio and visual. Once indexed, portions of the video can be stitched together to produce highlights which are made available to programme editors. For example, goals during a football game may be discovered by identifying video sequences where the goalmouth is present in the scene and the crowd are cheering.
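As a rough sketch of the football example, suppose upstream models have already produced two per-second signals: whether the goalmouth is in shot, and a normalised crowd-noise level. Both signals, the function name `find_highlights`, and the threshold values are hypothetical. The sketch only shows how the two cues might be combined into candidate clips, not how any vendor's product works.

```python
from typing import List, Tuple


def find_highlights(
    goalmouth_visible: List[bool],
    crowd_level: List[float],
    cheer_threshold: float = 0.8,  # assumed cut-off for "the crowd is cheering"
    min_length: int = 3,           # ignore coincidences shorter than this many seconds
) -> List[Tuple[int, int]]:
    """Return (start, end) second ranges, end exclusive, where both cues co-occur."""
    total = min(len(goalmouth_visible), len(crowd_level))
    highlights: List[Tuple[int, int]] = []
    start = None  # second at which the current candidate run began

    for second in range(total):
        hit = goalmouth_visible[second] and crowd_level[second] >= cheer_threshold
        if hit and start is None:
            start = second
        elif not hit and start is not None:
            if second - start >= min_length:
                highlights.append((start, second))
            start = None

    # Close a run that is still open when the signals end.
    if start is not None and total - start >= min_length:
        highlights.append((start, total))
    return highlights
```

The returned ranges could then be handed to an editor, or to a stitching step, as candidate highlight clips.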

AI in the video delivery chain

AI is now possible at the edge, on consumer electronics devices such as media players, smart TVs and set-top boxes. Semiconductor firms are developing the next generation of video decode chips that are capable of AI at the edge, and this enables AI to be employed in the personalisation of video services. “Usually, a consumer would need to log in to a service and identify content of interest; so, credentials must be exchanged and a profile of the user must reside on a server somewhere in the Cloud in order to tailor the services for them individually,” Forrest explains. “Using AI at the edge, it’s possible to recognise users locally either via voice, fingerprint, or via a camera.”

Users are distinguished through a simple identifier stored on the device rather than via log-in credentials held in the Cloud. He explains: “Basically, the system simply learns that family member number four likes films, whereas number two prefers documentaries, and the video service is tailored appropriately dependent upon which viewer is identified locally by the AI in the chip. No data needs leave the device itself, leading to improved security and increased privacy for the consumer.”
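A minimal sketch of that idea keeps viewing preferences in an on-device store keyed only by an anonymous viewer number. The `LocalProfiles` class and its method names are hypothetical. The recognition step (voice, fingerprint or camera) is assumed to happen elsewhere on the chip and to hand over nothing more than that number.

```python
from collections import Counter, defaultdict


class LocalProfiles:
    """Hypothetical on-device store: anonymous viewer number -> genre view counts."""

    def __init__(self) -> None:
        self._views = defaultdict(Counter)

    def record_view(self, viewer_id: int, genre: str) -> None:
        """Note that the locally recognised viewer watched something in this genre."""
        self._views[viewer_id][genre] += 1

    def preferred_genre(self, viewer_id: int, default: str = "popular") -> str:
        """Return the most-watched genre, or a default for viewers with no history."""
        counts = self._views.get(viewer_id)
        if not counts:
            return default
        return counts.most_common(1)[0][0]
```

Nothing in this structure identifies a person or requires a server-side account, which is the privacy property Forrest highlights.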

The future of machine learning

“It’s clear that machine learning and AI technology is being actively pursued in the video industry; indeed, companies are already reaping the benefits,” says Forrest. Broadcasters benefit from being able to quickly categorise and index video assets through the application of machine learning; OTT providers harness the advantages of AI-based encode technology to reduce the size of video assets and increase ROI on their streaming services; consumers benefit by being able to create their own video highlights based upon their own preferences, processed by AI in the Cloud and very soon at the edge in consumer electronics devices themselves.

“Nevertheless, AI is still a nascent technology with many developments yet to come,” says Forrest. “Machine learning will be largely invisible to end consumers but they will benefit from the outputs of the technology, be this improved video quality or lower service costs, or perhaps an ability to watch UHD content on lower bandwidth networks because of the improvements in video encoding. A classic example comes from the smartphone industry, where AI delivers outstanding images from the camera using AI-based computational photography.” Research from Futuresource finds that over 85 per cent of consumers don’t know that AI is being used to enhance photos and videos captured by their smartphone; they just know they have a better camera.

Development will continue and AI will improve further with enhanced training sets and advances in computational performance, both in the Cloud and at the edge. “Research institutions are already investigating the undesirable consequences of AI, including such things as bias where imperfect or skewed training sets can expressly influence the output from AI-based algorithms,” says Forrest. “There are also the ethics and societal impacts that AI could have if used in harmful ways. Recently there was research published that employed machine learning to predict the next word in a sentence having been seeded with only a small excerpt of text. The results were spectacular: the AI produced entire articles that could be misinterpreted as being written by humans. This is not just ‘fake news’; it has the potential to pollute the entire evolution of human knowledge.”

But it’s worth remembering that presently we have little more than a perception of intelligence; these are simply complex algorithms running on neural networks. Machines have a long way to go before they are capable of cognitive reasoning, empathy, self-awareness and a reasonable understanding of the world around them.

This article first appeared in TVBEurope’s 2019 MediaTech Outlook.